Latest News and Updates! A lot has been happening this last month!

Compel Prompts

Until now we used A1111-style prompt weighting: regular, (more), [less], ((even more)), [[[much less]]], (some concept: 1.5), etc. We’ve now switched to Compel-style weights, which are simpler and offer greater flexibility. It’s the same prompt weighting style used by InvokeAI.

See the Compel Syntax Docs for more information, including examples and pictures, but in short… regular, more+, less-, (even more)++, (much less)--- and (some concept)1.5 (note: here the weight goes outside the parentheses, and without a colon (“:”)).
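If you have old prompts lying around, the mapping above can be sketched as a small converter. This is only an illustration and not part of the site or of the Compel library: the function name `a1111_to_compel` is made up here, it handles only simple, non-nested weights, and the result is approximate (as far as I know, A1111 divides by 1.1 for each `[ ]` while Compel multiplies by 0.9 for each `-`).

```python
import re

# Hypothetical helper, not part of kiri.art or Compel.
# Handles only simple, non-nested A1111-style weights.
_WEIGHT = re.compile(
    r"""
      \((?P<etext>[^()\[\]:]+):\s*(?P<weight>\d*\.?\d+)\)   # (some concept: 1.5)
    | (?P<popen>\(+)(?P<ptext>[^()\[\]]+)(?P<pclose>\)+)    # (more), ((even more))
    | (?P<bopen>\[+)(?P<btext>[^()\[\]]+)(?P<bclose>\]+)    # [less], [[[much less]]]
    """,
    re.VERBOSE,
)


def _convert(match: re.Match) -> str:
    # Explicit numeric weight: the number moves outside the parentheses.
    if match.group("weight") is not None:
        return f"({match.group('etext').strip()}){match.group('weight')}"

    if match.group("popen") is not None:
        opener, text, closer = match.group("popen", "ptext", "pclose")
        sign = "+"
    else:
        opener, text, closer = match.group("bopen", "btext", "bclose")
        sign = "-"

    # Leave unbalanced brackets untouched rather than guess.
    if len(opener) != len(closer):
        return match.group(0)

    text = text.strip()
    # Multi-word phrases need parentheses; single words do not.
    body = f"({text})" if " " in text else text
    return body + sign * len(opener)


def a1111_to_compel(prompt: str) -> str:
    """Rewrite simple A1111-style weights in `prompt` using Compel syntax."""
    return _WEIGHT.sub(_convert, prompt)


if __name__ == "__main__":
    old = "regular, (more), [less], ((even more)), [[[much less]]], (some concept: 1.5)"
    print(a1111_to_compel(old))
```

Anything more complex (nested weights, Blends, Conjunctions) is best rewritten by hand with the syntax docs open.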

The more exciting features are Blends and Conjunctions. Conjunctions (.and()) help separate phrases (e.g. with ("a blue hat", "a red rose").and(), the colours will actually land up in the right places). For more info and examples, see the syntax docs above. Likewise for Blends, but here’s a quick example: ("spider man", "robot mech").blend(1, 0.8) can render:

Upsampling

The upsample feature has been painfully slow since its inception. After a massive spike in traffic (with many thanks to artech.cafe and our Iranian users - see below), this has finally been moved across to our newer infrastructure, and is super fast now.

Many bugs were fixed, and again, thanks to the artech.cafe user base for reporting these and for your patience. One small bug remains, but it happens very infrequently, and you can simply push the button again afterwards for it to work. We’re still trying to fix it, though.

As a reminder, you can send any outputted image (from Text 2 Image and other pages) straight to the Upsampler by tapping the image, selecting the “magic wand” icon, and choosing “Upsample”.

Above: 2.4s to upsample a 512x512 image (x4)

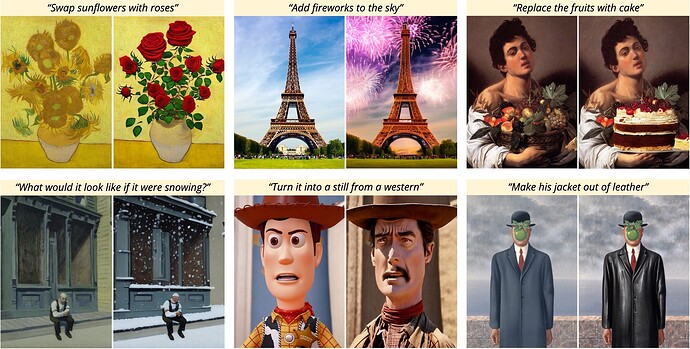

Instruct Pix2Pix

I’m super excited to announce a new feature to the site: Instruct Pix2Pix. It’s available from the side menu, the start page, and as a “magic wand” target from any outputted image (tap the image to reveal the image options).

This is a specialised model trained on editing instructions for image-to-image work. A picture is worth a thousand words, so:

The results are fantastic but it does require fine-tuning the settings for different types of images (particularly the prompt CFG scale and image CFG scale). Make sure to read the “What is this? & Important Tips” dropdown at the top of the page.

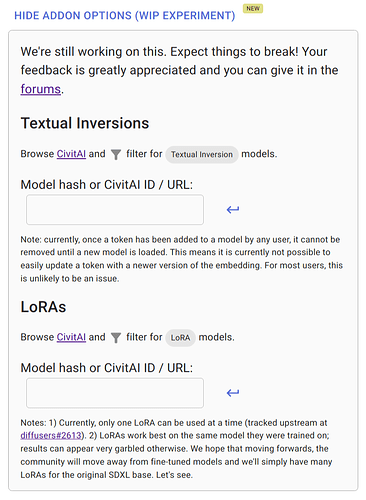

Addons (Textual Inversions and LoRAs)

Very excited to announce the ability to add Textual Inversions and LoRAs from CivitAI to the various models and pipelines. Just note that these, especially the LoRAs, work better on the models they were trained on… using them on other “compatible” models (even if they share the same “base” model) can lead to very unpredictable and poor results (notably, very pixelated and deformed images).

SDXL

- SDXL 1.0 was released and is now available in the list of models

- SDXL 1.0 refiner – coming soon to Image2Image

- LoRAs - working

- Textual Inversions - coming soon

Pricing

Older users will recall that every image initially cost 1 credit. This was greatly reduced in Dec/Jan after a lot of slowness and instability from our upstream provider at the time. We decided to develop an alternative in-house and dropped the image cost to 0.25 credits, where it has stayed for 6 months while we worked out all the bugs. It has proved itself to be pretty stable.

Kiri is an open source project developed in my spare time. I’ve tried to keep it as cheap as possible because the tech excites me and I want to make it accessible to as many people as possible. However, all features of the site require a GPU to run, and GPU rental is expensive! I’ve spent a lot of time and effort to keep costs down but ultimately I’ve lost about USD 7,000 on this project and can’t keep this up anymore.

The cost of credits will remain the same (for now), but the credit cost per image will have to go up. It will also be a more variable cost based on image size and num_inference_steps, as these add up to more GPU time, unfortunately. The request queue will have 3 priorities:

- 1st class - using paid credits

- 2nd class - using free credits but have spare paid credits

- 3rd class - using free credits, don’t own any paid credits
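The three classes above can be sketched as a simple priority queue. This is a rough illustration of the idea only, not the site’s actual code: every name here (`priority_class`, `RequestQueue`, `paid_balance`) is made up for the example, and it assumes requests within the same class are served first come, first served.

```python
import heapq
import itertools

# Hypothetical class labels; lower number = served first.
FIRST_CLASS, SECOND_CLASS, THIRD_CLASS = 1, 2, 3


def priority_class(uses_paid_credits: bool, paid_balance: float) -> int:
    """Pick a queue class for a request (assumed rules, see list above)."""
    if uses_paid_credits:
        return FIRST_CLASS
    # Using free credits: users who also own paid credits go ahead of those who don't.
    return SECOND_CLASS if paid_balance > 0 else THIRD_CLASS


class RequestQueue:
    """Priority queue: by class first, then by arrival order within a class."""

    def __init__(self) -> None:
        self._heap: list[tuple[int, int, str]] = []
        self._arrival = itertools.count()  # tie-breaker keeps FIFO order per class

    def push(self, request_id: str, uses_paid_credits: bool, paid_balance: float) -> None:
        prio = priority_class(uses_paid_credits, paid_balance)
        heapq.heappush(self._heap, (prio, next(self._arrival), request_id))

    def pop(self) -> str:
        """Return the id of the next request to run."""
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)


if __name__ == "__main__":
    queue = RequestQueue()
    queue.push("a", uses_paid_credits=False, paid_balance=0)   # 3rd class
    queue.push("b", uses_paid_credits=True, paid_balance=5)    # 1st class
    queue.push("c", uses_paid_credits=False, paid_balance=3)   # 2nd class
    queue.push("d", uses_paid_credits=True, paid_balance=1)    # 1st class
    print([queue.pop() for _ in range(len(queue))])
```

One thing a strict ordering like this leaves open is how long 3rd class requests wait when the site is busy; the sketch does nothing about that.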

I think this is the fairest approach, but feedback is welcome. I’ve investigated adverts too, but it’s not really the route I want to go. Perhaps reward videos, where you watch a video to earn a credit. We may also adjust the number of free daily credits, with “veteran” users receiving more than newer users. Let’s see.

The dollar cost of credits, the credit cost of generations, and the number of daily free credits are not final and are likely to be adjusted here and there in the coming days and weeks.

Where does the money go? To pay for servers and recover my losses. If I manage to make the site profitable, it will fund my continued development of new features, and allow for additional and faster servers. And if it becomes really successful, I’d really love to be able to compensate model authors for their hard work, based on paid credits used on their model (if the model license allows commercial image generation, at least).

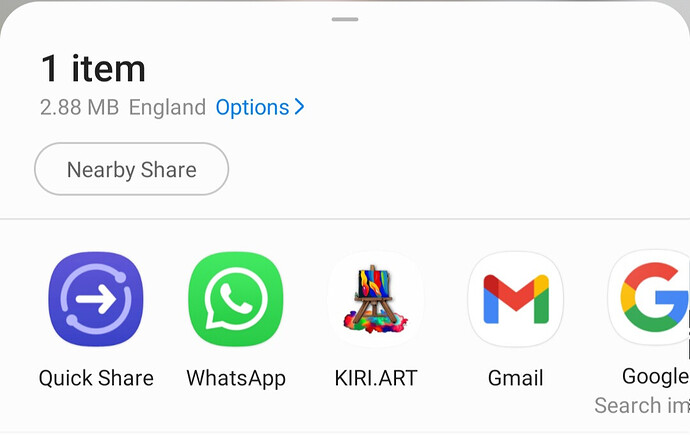

Share Target

Kiri.Art is a Progressive Web App (“PWA”) that can be installed on your mobile device and behave like an App (if you previously ignored this option, you can still find it in the menu of your mobile browser).

Kiri.Art is now a “share target”. If, for example, you open a picture in your phone’s Gallery, you can now click “Share” and Kiri.Art will appear in the list of supported share targets. This will then open Kiri.Art and you can choose to send it to Image2Image, Inpaint, Upsample or Instruct Pix2Pix, instead of having to open the files there by hand.

If you’ve already installed Kiri.Art on your phone, you’ll need to uninstall and re-install it to gain access to this feature.

Inpainting

Various fixes and improvements have been made over the last month… if you haven’t used Inpainting in a while, it’s better than ever. For best results, use an inpainting model. In the future, we’ll suggest these first instead of showing all models in the list.

Thanks to artech.cafe and our Iranian users

artech.cafe is a super popular photographic page based in Iran with over 200,000 followers. In July, they did a feature post on kiri.art, particularly our Upsampling feature.

The sudden influx of users and requests caught me off guard, and I’d like to apologise for all the issues that occurred in the days following the post. On the upside, see the note about Upsampling above for all the fixes and improvements that followed.

I really have to give a massive thanks to artech.cafe’s admin, for his fantastic communication with me throughout all these issues, and especially to all our Iranian users for your patience and support!

Downtime on Mon Aug 14th

Our servers were terminated without any warning or notice to us. I apologise for that; it’s still not clear what happened. In the longer term, we’re working on code to detect and recover from such cases automatically, but in the meantime, recovery only happens when I notice a problem or someone reports one, and I can manually configure a new server.

As usual, whenever we bring a new server online, all the models will need to be downloaded again the first time they’re used. Sorry about that.

We’ve also noticed that occasionally the queue gets stuck and have hopefully fixed this now.

Would love to hear from you!

We’re approaching our first birthday, and would love to hear from you. We’ve never managed to elicit much feedback from users and I hope the forums will change that.

- What do you think about all the recent changes?

- What are your favourite and least favourite parts of kiri.art?

- How are we better or worse than alternatives?

- What are you using kiri for?

- What would you like to see next?

Thanks, all