This FAQ is about Banana’s infrastructure only. For support with specific models, post in the appropriate model's category (and see its FAQ, e.g. the one for Stable Diffusion).

Pricing

- $0.00025996/second [1]. Billed seconds include model load time, inference time and the idle timeout. More info on these (with example pricing calculations) is in the billing docs.

- That’s about $685/mo, i.e. the cost of a single container if it were online 24/7 (e.g. if you set minimum_replicas=1 and never had more than one request coming in at a time). With the default configuration of minimum_replicas=0 and timeout=10s, you’ll pay much less than this! See the examples linked in the previous bullet point.
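The arithmetic behind these figures can be sketched as below. This is a rough estimate, not Banana's billing logic: it assumes a month of 30.5 days (which reproduces the ~$685 figure), and the cold-request helper assumes each request starts a container from zero and nothing else arrives before the idle timeout expires. The function names and the 5s/2s timings are hypothetical.

```python
# Per-second price quoted in the FAQ [1].
PRICE_PER_SECOND = 0.00025996

SECONDS_PER_DAY = 24 * 60 * 60


def always_on_monthly_cost(days: float = 30.5) -> float:
    """One container online all month, e.g. minimum_replicas=1 with no concurrency.

    Assumption: 30.5 days approximates an average month.
    """
    return PRICE_PER_SECOND * days * SECONDS_PER_DAY


def cold_request_cost(load_s: float, inference_s: float, idle_timeout_s: float = 10.0) -> float:
    """Cost of one isolated request that starts a container from zero.

    Assumption (minimum_replicas=0): billed time is model load + inference
    + the idle timeout, and no other request arrives in the meantime.
    """
    return PRICE_PER_SECOND * (load_s + inference_s + idle_timeout_s)


print(f"${always_on_monthly_cost():.2f}/mo always on")
# Hypothetical timings: 5s model load, 2s inference, default 10s idle timeout.
print(f"${cold_request_cost(5, 2):.5f} per cold request")
```

Requests that arrive while a container is already warm skip the load time and share the idle window, so real bills for bursty traffic will differ from this per-request estimate.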

Hardware

- Nvidia A100-40GB machines. Note, however, that Banana containers are limited to 16GB [2], since multiple containers share a single GPU card. Flexible RAM sizing is on the roadmap, but not in the immediate future.
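A quick way to sanity-check whether a model's weights could fit under that limit is to multiply its parameter count by the bytes per parameter. This sketch assumes the 16GB limit refers to GPU memory available to the container and uses decimal gigabytes (1 GB = 1e9 bytes); the FAQ confirms neither. It counts weights only, so activations, caches and framework overhead come on top, and a model whose weights alone approach 16GB will likely not fit in practice. The names below are hypothetical.

```python
# Assumption: decimal GB (1e9 bytes); the FAQ does not say decimal vs. binary.
CONTAINER_LIMIT_GB = 16

# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weights_gb(num_params: float, dtype: str = "fp16") -> float:
    """Memory needed just to hold the model weights, in GB."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def weights_fit(num_params: float, dtype: str = "fp16",
                limit_gb: float = CONTAINER_LIMIT_GB) -> bool:
    """Rough lower-bound check: ignores activations and runtime overhead."""
    return weights_gb(num_params, dtype) <= limit_gb


# Hypothetical 7B-parameter model at two precisions.
print(weights_gb(7e9, "fp16"), weights_fit(7e9, "fp16"))
print(weights_gb(7e9, "fp32"), weights_fit(7e9, "fp32"))
```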

Limits

- 50MB incoming payload (the HTTP POST JSON sent to the container).

- Storage: fair use. “more than 20TB to store deployments but please be kind to us. we don’t cap storage rn” [3]

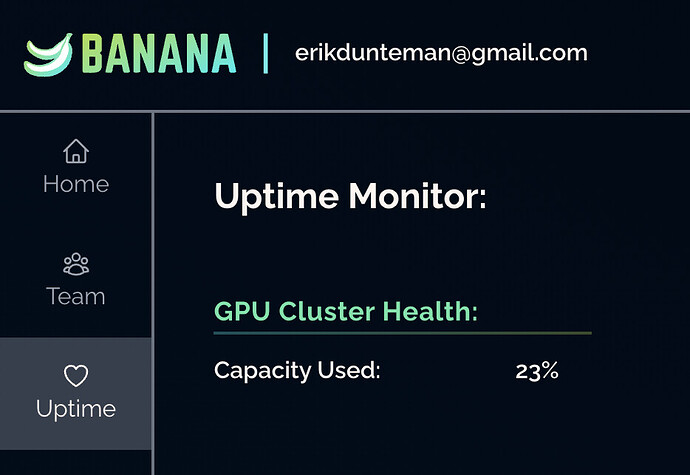
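Because the limit applies to the JSON body itself, binary inputs such as images count at their encoded size, and base64 encoding inflates raw bytes by about 4/3. The sketch below measures a payload before sending it. It assumes "50mb" means 50 × 1024 × 1024 bytes; the exact threshold Banana enforces may differ, so leave some headroom. The helper names and the `image_b64` key are hypothetical.

```python
import base64
import json

# Assumption: "50mb" read as 50 MiB; the enforced limit may differ slightly.
MAX_PAYLOAD_BYTES = 50 * 1024 * 1024


def payload_size_bytes(payload: dict) -> int:
    """Size of the JSON body as it would be sent in the HTTP POST (UTF-8)."""
    return len(json.dumps(payload).encode("utf-8"))


def fits_payload_limit(payload: dict, limit: int = MAX_PAYLOAD_BYTES) -> bool:
    """True if the serialized payload is within the assumed limit."""
    return payload_size_bytes(payload) <= limit


# Hypothetical payloads: zero-filled stand-ins for 30 MiB and 40 MiB images.
small = {"image_b64": base64.b64encode(bytes(30 * 1024 * 1024)).decode("ascii")}
large = {"image_b64": base64.b64encode(bytes(40 * 1024 * 1024)).decode("ascii")}
print(payload_size_bytes(small), fits_payload_limit(small))
print(payload_size_bytes(large), fits_payload_limit(large))
```

Note that the 40 MiB input is under 50MB as raw bytes but exceeds it once base64-encoded; for large inputs, passing a URL to a file in object storage instead of inlining the data avoids this.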

Capacity

- “Capacity related issues do not affect servers that are currently running, so while we work on keeping capacity ahead of growth and improving reliability, for mission critical workloads like demos, it may be helpful to flip min replicas to 1 or more ahead of the demo to ensure you get capacity. Not the ideal solution, but hopefully a helpful way to think about it as we get through these growth pains” [4]
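To budget for that workaround, note that a warm replica is billed for every second it stays online, whether or not it serves requests (the same reasoning as the ~$685/mo always-on figure in the pricing section). A minimal sketch, assuming the per-second price from the pricing section applies unchanged; the function name and the two-hour window are hypothetical.

```python
# Per-second price from the pricing section.
PRICE_PER_SECOND = 0.00025996


def warm_replicas_cost(hours: float, replicas: int = 1) -> float:
    """Cost of holding `replicas` containers online for `hours`, traffic or not."""
    return PRICE_PER_SECOND * hours * 3600 * replicas


# Hypothetical: set min replicas to 1 an hour before a 1-hour demo,
# then set it back to 0 afterwards.
print(f"${warm_replicas_cost(2):.2f} for 1 replica over 2 hours")
print(f"${warm_replicas_cost(1, replicas=3):.2f} for 3 replicas over 1 hour")
```

Any extra containers that scale up to handle concurrent demo traffic are billed on top of this baseline.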

Related Services

- Banana HQ is in San Francisco, so us-west is a good choice of region for other services your container needs to communicate with (S3, etc.).