Introduction

Warning: This is still under active development and only for the truly adventurous.

You need the dev branch of docker-diffusers-api (since 2022-11-22) until this is released into main. It’s still very new and will undergo further development based on feedback from early users.

Dreambooth is a method to personalize text2image models like Stable Diffusion given just a few (3 to 5) images of a subject. Here we provide a REST API around the diffusers dreambooth implementation (consider reading that first if you haven’t already).

There are two steps to using dreambooth. Each step happens in a separate container with its own build-args (but with the same docker-diffusers-api source).

- Fine-tuning an existing model with your new pics (and then uploading the fine-tuned model somewhere after training)

- Using your new model (deploying a new container that will download it at build time for nice and fast cold starts).

Let’s take a look at each step.

Training / Fine-tuning

Deploy a new repo with the following build-args (either via Banana’s dashboard, or by editing the Dockerfile in the appropriate places, committing and pushing).

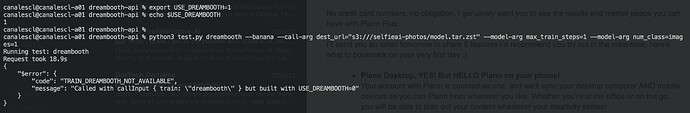

- USE_DREAMBOOTH=1

- PRECISION="" (downloads the fp32 weights needed for training; output still defaults to fp16)

- For S3 only, set AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY and AWS_DEFAULT_REGION. Don’t set AWS_S3_ENDPOINT_URL unless your non-Amazon S3-compatible storage provider has told you exactly what to put here. More info in docs/storage.md.
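Before building, it can help to sanity-check the S3 build-args described above. The following is a minimal, hypothetical pre-flight check. It is not part of docker-diffusers-api and only encodes the advice given here: the three AWS variables must be set, and AWS_S3_ENDPOINT_URL should normally stay unset.

```python
# Hypothetical pre-flight check for the S3 build-args; not part of
# docker-diffusers-api, it only mirrors the advice in this post.
from typing import List, Mapping

REQUIRED_S3_VARS = ("AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY", "AWS_DEFAULT_REGION")


def check_s3_build_args(env: Mapping[str, str]) -> List[str]:
    """Return a list of problems; an empty list means the settings look OK."""
    problems = [f"{name} is not set" for name in REQUIRED_S3_VARS if not env.get(name)]
    # Only non-Amazon S3-compatible providers should need a custom endpoint.
    if env.get("AWS_S3_ENDPOINT_URL"):
        problems.append(
            "AWS_S3_ENDPOINT_URL is set; leave it unset unless your "
            "non-Amazon provider told you exactly what to put here"
        )
    return problems
```

For example, check_s3_build_args(os.environ) run in the same shell you build from returns the list of anything missing.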

Now either build and run locally, or deploy (e.g. to Banana), and test with any of the following methods:

# For both options below, make sure BANANA_API_KEY and BANANA_MODEL_KEY
# are set. Or, for local testing, simply remove the --banana parameter
# to have test.py connect to localhost:8000.

# Upload to HuggingFace. Make sure your HF token has read/write access!
$ python test.py dreambooth --banana \
  --model-arg hub_model_id="huggingFaceUsername/modelName" \
  --model-arg push_to_hub=True

# Upload to S3 (note the triple forward slash /// below)
$ python test.py dreambooth --banana \
  --call-arg dest_url="s3:///bucket/model.tar.zst"

# For prior preservation loss, add:
  --model-arg with_prior_preservation=true \
  --model-arg prior_loss_weight=1.0 \
  --model-arg class_prompt="a photo of dog"

# One iteration only (great to test your workflow), add:
  --model-arg max_train_steps=1 \
  --model-arg num_class_images=1

NB: this runs the dreambooth test from test.py, which has a number of important JSON defaults that get sent along too. The default test trains with the dog pictures in tests/fixtures/dreambooth with the prompt "photo of sks dog". The --call-arg and --model-arg parameters allow you to override and add to these test defaults.
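Conceptually, each --model-arg key=value is layered on top of the test’s default modelInputs. The sketch below is hypothetical: it illustrates that idea and shows the modelInputs you would send yourself for the prior-preservation and one-iteration flags. It is not the actual parsing code in test.py, whose value conversion may differ. The single default shown is taken from the example JSON in this post.

```python
# Hypothetical illustration of layering "key=value" overrides over defaults.
# This is NOT test.py's real parser; its conversion rules may differ.
import json
from typing import Dict, List


def coerce(value: str):
    """Convert CLI strings like 'true', '1', '1.0' into JSON-friendly values."""
    if value.lower() in ("true", "false"):
        return value.lower() == "true"
    for cast in (int, float):
        try:
            return cast(value)
        except ValueError:
            pass
    return value  # anything else stays a string


def apply_overrides(defaults: Dict, overrides: List[str]) -> Dict:
    """Return a copy of defaults with each 'key=value' override applied."""
    merged = dict(defaults)
    for item in overrides:
        key, _, raw = item.partition("=")
        merged[key] = coerce(raw)
    return merged


# Default taken from the example JSON in this post; extend as needed.
DEFAULT_MODEL_INPUTS = {"instance_prompt": "a photo of sks dog"}

model_inputs = apply_overrides(
    DEFAULT_MODEL_INPUTS,
    [
        "with_prior_preservation=true",
        "prior_loss_weight=1.0",
        "class_prompt=a photo of dog",  # the shell strips the quotes
        "max_train_steps=1",
        "num_class_images=1",
    ],
)
print(json.dumps(model_inputs, indent=2))
```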

Alternatively, in your own code, you can send the full JSON yourself:

{
  "modelInputs": {
    "instance_prompt": "a photo of sks dog",
    "instance_images": [ b64encoded_image1, b64encoded_image2, ... ],
    // Option 1: upload to HuggingFace (see notes below)
    // Make sure your HF API token has read/write access.
    "hub_model_id": "huggingFaceUsername/targetModelName",
    "push_to_hub": true
  },
  "callInputs": {
    "MODEL_ID": "runwayml/stable-diffusion-v1-5",
    "PIPELINE": "StableDiffusionPipeline",
    "SCHEDULER": "DDPMScheduler", // train_dreambooth default
    "train": "dreambooth",
    // Option 2: store on S3. Note the s3:/// (three slashes). See notes below.
    "dest_url": "s3:///bucket/filename.tar.zst"
  }
}
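If you are building this body in Python, the sketch below base64-encodes raw image bytes and fills in the fields from the JSON above. The helper names are hypothetical. Requiring exactly one upload target is a design choice of this sketch, since the JSON lists HuggingFace and S3 as alternatives.

```python
# Minimal sketch of building the training request body shown above.
# Helper names are hypothetical; field names come from the example JSON.
import base64
from typing import List, Optional


def encode_image(data: bytes) -> str:
    """Base64-encode raw image bytes (e.g. a JPEG or PNG file) as ASCII text."""
    return base64.b64encode(data).decode("ascii")


def build_dreambooth_request(
    images: List[bytes],
    instance_prompt: str,
    hub_model_id: Optional[str] = None,
    dest_url: Optional[str] = None,
) -> dict:
    """Return a {"modelInputs", "callInputs"} body with one upload target."""
    if (hub_model_id is None) == (dest_url is None):
        raise ValueError("set exactly one of hub_model_id or dest_url")
    model_inputs = {
        "instance_prompt": instance_prompt,
        "instance_images": [encode_image(img) for img in images],
    }
    call_inputs = {
        "MODEL_ID": "runwayml/stable-diffusion-v1-5",
        "PIPELINE": "StableDiffusionPipeline",
        "SCHEDULER": "DDPMScheduler",  # train_dreambooth default
        "train": "dreambooth",
    }
    if hub_model_id is not None:
        # Option 1: push to HuggingFace (token needs read/write access).
        model_inputs["hub_model_id"] = hub_model_id
        model_inputs["push_to_hub"] = True
    else:
        # Option 2: upload to S3, e.g. "s3:///bucket/model.tar.zst".
        call_inputs["dest_url"] = dest_url
    return {"modelInputs": model_inputs, "callInputs": call_inputs}
```

For example, pass the bytes of each photo (read with Path.read_bytes() from a folder of your own 3 to 5 images) together with dest_url="s3:///bucket/model.tar.zst". Then serialize the result with json.dumps before sending it.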

Other Options

- modelInputs

  - mixed_precision (default: "fp16"): this takes half the training time and produces smaller models. If you instead want to create a new, full precision fp32 model, pass the modelInput { "mixed_precision": "no" }.

  - resolution (default: 512): set to 768 for Stable Diffusion 2 models (except for -base variants).
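The resolution rule above can be expressed as a small helper. This is a hypothetical heuristic that guesses from the model id alone: ids containing "stable-diffusion-2" get 768 unless they end in "-base". It is not a rule enforced by docker-diffusers-api, so check the model card if in doubt.

```python
# Hypothetical helper encoding the guidance above; it guesses from the
# model id string only, so verify against the model card.


def suggested_model_inputs(model_id: str, full_precision: bool = False) -> dict:
    """Return optional training modelInputs for the given base model id."""
    name = model_id.lower().rstrip("/")
    is_sd2 = "stable-diffusion-2" in name
    is_base = name.endswith("-base")
    inputs = {"resolution": 768 if is_sd2 and not is_base else 512}
    if full_precision:
        # Default is "fp16" mixed precision; "no" trains a full fp32 model.
        inputs["mixed_precision"] = "no"
    return inputs
```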

Using your fine-tuned model (Inference)

Now you need to deploy another docker-diffusers-api container that’s built against your new model, with the following build-args (again, either through Banana’s dashboard or by editing the Dockerfile):

- If you uploaded to HuggingFace:
  - Set MODEL_ID=<hub_model_id> from before.

- If you uploaded to S3:
  - Set MODEL_ID to an arbitrary (unique) name.
  - Set MODEL_URL="s3:///bucket/model.tar.zst" (the filename from the previous step). Note the three forward slashes at the beginning (see the notes below).

That’s it! Docker-diffusers-api will download your model at build time and be ready for super fast inference.
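The two cases above boil down to a small mapping from where the model was stored to build-args. The sketch below is hypothetical: the function names and inputs are illustrative, and only the MODEL_ID and MODEL_URL build-arg names come from this post. It also renders the result as docker build --build-arg flags for local builds.

```python
# Hypothetical sketch: derive inference build-args from the storage choice.
# Only MODEL_ID and MODEL_URL are real build-arg names from this post.
from typing import Dict, List, Optional


def inference_build_args(hub_model_id: Optional[str] = None,
                         s3_url: Optional[str] = None,
                         name: Optional[str] = None) -> Dict[str, str]:
    if hub_model_id:
        # HuggingFace: MODEL_ID is the hub_model_id used during training.
        return {"MODEL_ID": hub_model_id}
    if s3_url and name:
        # S3: MODEL_ID is any unique name; MODEL_URL points at the archive.
        # (s3:/// with three slashes means "no custom endpoint".)
        if not s3_url.startswith("s3://"):
            raise ValueError("expected an s3:// URL")
        return {"MODEL_ID": name, "MODEL_URL": s3_url}
    raise ValueError("pass hub_model_id, or both s3_url and name")


def as_docker_flags(args: Dict[str, str]) -> List[str]:
    """Render build-args as `docker build --build-arg KEY=VALUE` flags."""
    flags: List[str] = []
    for key, value in args.items():
        flags += ["--build-arg", f"{key}={value}"]
    return flags
```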

As usual, you can override one of the default tests to specifically test your new model, e.g.:

$ python test.py txt2img --banana \
  --call-arg MODEL_ID="<your_MODEL_ID_used_above>" \
  --model-arg prompt="sks dog"
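To call a locally running inference container from your own code rather than through test.py, here is a sketch using only the standard library. It assumes the container listens on http://localhost:8000/ and accepts a POST of the same {"modelInputs", "callInputs"} JSON used throughout this post, which is what test.py talks to when --banana is omitted. test.py’s txt2img test sends further defaults that this sketch omits. No particular response fields are assumed: the parsed JSON is returned as-is.

```python
# Sketch: POST a txt2img request to a local container (assumed endpoint
# http://localhost:8000/). Response fields are not assumed.
import json
import urllib.request


def build_txt2img_request(model_id: str, prompt: str) -> dict:
    """Minimal request body; test.py may send additional defaults."""
    return {
        "modelInputs": {"prompt": prompt},
        "callInputs": {"MODEL_ID": model_id, "PIPELINE": "StableDiffusionPipeline"},
    }


def post_local(body: dict, url: str = "http://localhost:8000/") -> dict:
    """POST the JSON body and return the decoded JSON response."""
    req = urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=600) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

For example, post_local(build_txt2img_request("<your_MODEL_ID_used_above>", "sks dog")) mirrors the test command above against a local build.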

Roadmap

- Status updates
  - S3 support: done, see example above and notes below.

With an eye to the future, I know both the Banana team and I would love an API to deploy your model automatically on completion; I’ll speak to them about this after I finish the fundamentals. I may also consider allowing a container to download your model at run time. There are some use-cases for this, but don’t forget, it will be much slower.

Known Issues

- No fp16 support, pending #1247 "Attempting to unscale FP16 gradients".

- xformers / memory_efficient_attention: now working!

- Prior preservation: now supported, see example above!

- Not tested on Banana yet. From what I recall, the timeout limit was raised, but I don’t remember if this has to be requested. I’ll check closer to official release time. Also, it would be great to have the fp16 + xformers stuff fixed before then, as that will greatly speed up training. But I’m focusing on the basics first.

- Banana runtime logs have a length limit! If you see (in the runtime logs) that training gets “stuck” early on (at around the 100 iteration point?), fear not… your run()/check() will still complete in the end after your model has been uploaded. I’ll look into making the logs… shorter.

Storage

This was explained above, but here’s a summary:

- HuggingFace: HuggingFace allows for unlimited private models even on their free plan, and is super well integrated into the diffusers library (and you already have your token all set up and in the repo! Just make sure your token has read/write access). However, HuggingFace is also much slower. In initial tests from a Banana instance, for an average 4GB model, we saw roughly 5 MB/s (40 Mbps), or about 15m to upload. So, although you’re not paying for storage, you’ll end up paying much more to Banana, because you’re paying for those upload seconds with GPU seconds.
  - Upload: set { modelInputs: { hub_model_id: "hfUserName/modelName", push_to_hub: true }}
  - Download on build: set build-arg MODEL_ID=<hub_model_id_above>, that’s it!

- S3: see docs/storage.md. For an average 4GB model, from Banana to AWS us-west-1, we saw 60 MB/s (480 Mbps), or about 1m. This works out to 1/15th of the time/cost in GPU seconds of uploading to HuggingFace. Make sure to set the following build-args: AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY, AWS_DEFAULT_REGION. Do not set AWS_S3_ENDPOINT_URL unless your non-Amazon S3-compatible storage provider has told you that you need it. More info on S3-related build-args in docs/storage.md.
  - Uploads: set e.g. { callInputs: { dest_url: "s3:///bucket/model.tar.zst" } }
  - Download on build: set e.g. MODEL_URL="s3:///bucket/model.tar.zst"
  - Development: optionally run your own local S3 server with 1 command!
  - NB: note that it’s s3:/// at the beginning (three forward slashes). More details on this format in the storage doc above.
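Why three slashes? One reading of the format is that the part between "s3://" and the next "/" is a custom endpoint. An empty endpoint then means "use the default" (AWS, or AWS_S3_ENDPOINT_URL if set), and the bucket and key follow. That reading is an assumption; docs/storage.md has the authoritative rules. The simplified parser below, built on Python’s urllib.parse, shows how the URL splits apart under that assumption.

```python
# Simplified sketch of the s3://[endpoint]/bucket/key format (assumed;
# see docs/storage.md for the real rules).
from typing import NamedTuple, Optional
from urllib.parse import urlparse


class S3Location(NamedTuple):
    endpoint: Optional[str]  # None -> default endpoint
    bucket: str
    key: str


def parse_s3_url(url: str) -> S3Location:
    parsed = urlparse(url)
    if parsed.scheme != "s3":
        raise ValueError(f"not an s3:// URL: {url!r}")
    # With s3:///bucket/key the netloc is empty and the path is /bucket/key.
    bucket, _, key = parsed.path.lstrip("/").partition("/")
    if not bucket or not key:
        raise ValueError(f"expected s3://[endpoint]/bucket/key, got {url!r}")
    return S3Location(parsed.netloc or None, bucket, key)
```

Under this reading, a two-slash URL like s3://bucket/model.tar.zst would treat "bucket" as the endpoint and leave no key, so the sketch rejects it. This is why the leading s3:/// matters.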

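To make the storage trade-off concrete, here is a back-of-envelope calculation using the throughput figures quoted in this post. It assumes a 4GB model is 4000 MB and that throughput stays constant, both simplifications. Because upload time on Banana is paid for as GPU time, the ratio of the two durations approximates the cost ratio.

```python
# Back-of-envelope upload times from the rates quoted in this post.
# Assumes 4 GB = 4000 MB and constant throughput.


def upload_seconds(size_mb: float, rate_mb_per_s: float) -> float:
    return size_mb / rate_mb_per_s


MODEL_MB = 4000
hf = upload_seconds(MODEL_MB, 5)   # HuggingFace from Banana, ~5 MB/s
s3 = upload_seconds(MODEL_MB, 60)  # S3 us-west-1 from Banana, ~60 MB/s

print(f"HuggingFace: {hf / 60:.1f} min, S3: {s3 / 60:.1f} min, ratio {hf / s3:.0f}x")
# prints: HuggingFace: 13.3 min, S3: 1.1 min, ratio 12x
```

The raw rates give roughly a 12x difference. The rounded 15m and 1m measurements give the 1/15th estimate. Either way, S3 uploads cost an order of magnitude fewer GPU seconds.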

Acknowledgements

- Massive thanks to all our dreambooth early adopters: Klaudioz, hihihi, grf, Martin_Rauscher and jochemstoel. There is no way we would have reached this point without them.

- Special thanks to @Klaudioz, one of our earliest adopters, who patiently ploughed through our poor documentation (at the time), and helped us improve it for everyone! The detailed, clear docs you see above are largely a result of our back-and-forth in the thread below.