What is it?

Safetensors is a newer model format that offers super fast model loading. Combined with other improvements in both diffusers and docker-diffusers-api, model init time is now super fast and outperforms Banana’s current optimization methods (see the comparison below).
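Part of why loading is fast is the file layout itself. A safetensors file is an 8-byte little-endian header length, then a JSON header mapping each tensor name to its dtype, shape and byte offsets, then a single raw data buffer. A loader can read the small header and map tensor bytes directly, with no pickle and no per-tensor deserialization. The pure-Python sketch below writes and reads that layout for one toy tensor to show the idea. The helper names are hypothetical; in practice you would use the `safetensors` package (e.g. `safetensors.torch.save_file` and `load_file`) rather than anything like this.

```python
import json
import struct


def save_safetensors(tensors: dict) -> bytes:
    """Serialize {name: (dtype, shape, raw_bytes)} into the safetensors layout (hypothetical sketch)."""
    header = {}
    buffer = bytearray()
    for name, (dtype, shape, data) in tensors.items():
        begin = len(buffer)
        buffer.extend(data)
        # Offsets are relative to the start of the data buffer, not the start of the file.
        header[name] = {"dtype": dtype, "shape": shape, "data_offsets": [begin, len(buffer)]}
    header_bytes = json.dumps(header).encode("utf-8")
    # Pad the JSON header with spaces so the data buffer starts on an 8-byte boundary.
    header_bytes += b" " * (-len(header_bytes) % 8)
    # 8-byte little-endian unsigned header length, then the header, then the raw tensor bytes.
    return struct.pack("<Q", len(header_bytes)) + header_bytes + bytes(buffer)


def read_header(blob: bytes):
    """Return (header_dict, data_start) by reading only the length prefix and the JSON header."""
    (header_len,) = struct.unpack_from("<Q", blob, 0)
    header = json.loads(blob[8 : 8 + header_len].decode("utf-8"))
    return header, 8 + header_len


def tensor_view(blob: bytes, name: str) -> memoryview:
    """Return a zero-copy view of one tensor's bytes (the same idea mmap-based loaders rely on)."""
    header, data_start = read_header(blob)
    begin, end = header[name]["data_offsets"]
    return memoryview(blob)[data_start + begin : data_start + end]


# Usage: a 2x2 float32 "weight", packed little-endian as the format requires.
raw = struct.pack("<4f", 0.5, -1.0, 2.0, 3.5)
blob = save_safetensors({"linear.weight": ("F32", [2, 2], raw)})
header, _ = read_header(blob)
values = struct.unpack("<4f", tensor_view(blob, "linear.weight"))
print(header["linear.weight"], values)
```

Reading a tensor only touches the header plus that tensor's byte range, which is why large checkpoints in this format can be opened so quickly.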

We’ve provided links to safetensors versions of common models below. The latest versions of docker-diffusers-api can also convert any model (diffusers or .ckpt) to safetensors format for you and store it on your own S3-compatible storage (this is how the models below were created). These converted models can then be downloaded and built into your image if you want (e.g. on Banana).

How to use it

Consuming a previously converted model

Download at Build Time (e.g. for Banana)

Use the “build-download” repo (or its RunPod variant):

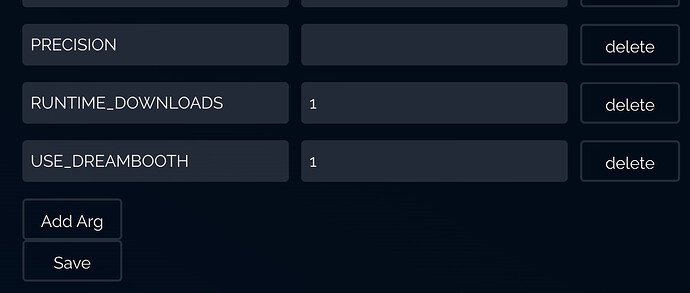

See the README there and set the build-args appropriately, particularly MODEL_URL. Use one of the prebuilt models below or, if you have your own S3-compatible storage set up, either give the full S3 URL (in the format described in the Storage docs), or set it to s3:// to download from default_bucket/models--REPO_ID--MODEL_ID--PRECISION.tar.zst.
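As a rough illustration of the s3:// shortcut, the sketch below shows how a model ID and precision could map to the `models--REPO_ID--MODEL_ID--PRECISION.tar.zst` object name described above. Both helper names are hypothetical and `my-bucket` is a placeholder for your default bucket; check the docker-diffusers-api source and Storage docs for the exact rules (for example, how a full S3 URL is parsed).

```python
def archive_key(model_id: str, precision: str) -> str:
    """Build models--REPO_ID--MODEL_ID--PRECISION.tar.zst from e.g. "org/model" (hypothetical helper)."""
    repo_id, model_name = model_id.split("/", 1)
    return f"models--{repo_id}--{model_name}--{precision}.tar.zst"


def resolve_model_url(model_url: str, model_id: str, precision: str, default_bucket: str) -> str:
    """Expand a bare "s3://" to default_bucket/<archive>; any other (full) URL is returned unchanged."""
    if model_url == "s3://":
        return f"{default_bucket}/{archive_key(model_id, precision)}"
    return model_url


print(resolve_model_url("s3://", "stabilityai/stable-diffusion-2-1-base", "fp16", "my-bucket"))
# my-bucket/models--stabilityai--stable-diffusion-2-1-base--fp16.tar.zst
```

The same naming scheme produces the prebuilt archive names listed further down, e.g. `runwayml/stable-diffusion-v1-5` at fp16 becomes `models--runwayml--stable-diffusion-v1-5--fp16.tar.zst`.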

NB: There is currently a known issue where build args don’t override values I set earlier in the build. I’m working on this; for now, please set any variables using ENV lines only.

Runtime

TODO

Producing your own converted models

Requires S3-compatible storage

Set up the default, regular repo (i.e., not the “build-download” variant). The default build allows models to be downloaded at runtime. Call it like this:

$ python test.py txt2img \
    --call-arg MODEL_ID="stabilityai/stable-diffusion-2-1-base" \
    --call-arg MODEL_URL="s3://" \
    --call-arg MODEL_PRECISION="fp16" \
    --call-arg MODEL_REVISION="fp16"

docker-diffusers-api will download the model, convert it to safetensors format, and upload the archive to your S3-compatible bucket. CHECKPOINT_URL is supported too, for converting .ckpt files. When the process completes, it will also return an image.
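Each `--call-arg KEY=VALUE` pair in the command above ends up as a call input sent to the container. The sketch below parses such pairs and assembles a JSON request body. The `callInputs`/`modelInputs` key names are assumed to match docker-diffusers-api's request format, and the helper names and sample prompt are hypothetical, so treat test.py as the authority on what is actually sent.

```python
import json


def parse_call_args(pairs: list) -> dict:
    """Turn ["KEY=VALUE", ...] (as given via --call-arg) into a dict (hypothetical helper)."""
    call_inputs = {}
    for pair in pairs:
        key, sep, value = pair.partition("=")
        if not sep:
            raise ValueError(f"expected KEY=VALUE, got {pair!r}")
        # The shell normally removes the quotes; strip any that survive, just in case.
        call_inputs[key] = value.strip('"')
    return call_inputs


def build_payload(call_inputs: dict, model_inputs: dict) -> str:
    """Assemble the request body; the top-level key names are an assumption, not confirmed API."""
    return json.dumps({"modelInputs": model_inputs, "callInputs": call_inputs})


call_inputs = parse_call_args([
    "MODEL_ID=stabilityai/stable-diffusion-2-1-base",
    "MODEL_URL=s3://",
    "MODEL_PRECISION=fp16",
    "MODEL_REVISION=fp16",
])
# Hypothetical prompt; the txt2img test in test.py supplies its own model inputs.
print(build_payload(call_inputs, {"prompt": "a photo of an astronaut riding a horse"}))
```

Keeping call inputs (which model to load, from where, at what precision) separate from model inputs (prompt and generation settings) is what lets the same request both trigger the conversion and return an image.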

Prebuilt models

Note: these downloads are rate-limited, so their speed doesn’t reflect the full speed of Cloudflare R2.

- models--stabilityai--stable-diffusion-2-1--fp16.tar.zst

- models--stabilityai--stable-diffusion-2-1-base--fp16.tar.zst

- models--stabilityai--stable-diffusion-2--fp16.tar.zst

- models--stabilityai--stable-diffusion-2-base--fp16.tar.zst

- models--runwayml--stable-diffusion-v1-5--fp16.tar.zst

- models--runwayml--stable-diffusion-inpainting--fp16.tar.zst

- models--CompVis--stable-diffusion-v1-4--fp16.tar.zst

Comparison to Banana’s Optimization

A quick look through https://kiri.art/logs reveals:

- Banana optimization: 2.3s - 6.4s init time (usually around 3.0s)

- Our optimization: 2.0s - 2.5s init time (usually about 2.2s)*

*Our optimization was only tested over a limited period, so its range could turn out to be wider.
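Using the typical values from these logs, the difference works out roughly as follows (simple arithmetic on the numbers above, not a separate benchmark):

```latex
\Delta t \approx 3.0\,\text{s} - 2.2\,\text{s} = 0.8\,\text{s},
\qquad
\frac{3.0\,\text{s}}{2.2\,\text{s}} \approx 1.36,
\qquad
\frac{0.8\,\text{s}}{3.0\,\text{s}} \approx 27\%
```

That is, about 0.8s (roughly 27%) less init time per cold start in the typical case.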

Why we’re no longer supporting Banana’s Optimization

- It’s incredibly fickle… small changes can break it.

- It’s incredibly slow, and it’s the main reason Banana builds take so long.

- There’s no explanation when it fails. All we can do is try one thing after another, waiting 1hr+ each time to see whether it worked.

- There’s no way to test it locally either, so we’re stuck with that slow trial-and-error loop.

- There has been a loooot of downtime recently, and when it’s down we still get the regular failure message, with no indication that the problem is system-wide rather than in our own code.

- It’s super limiting… I’ve had to avoid many improvements previously because they would break optimization.

- Our own optimization is faster anyway.

I really hope Banana will give us a way to opt out of optimization in future, as it would really speed up deployments!

Questions, issues, etc

- (Banana) “Warning: Optimization Failed”: You can safely ignore this warning, since we no longer use Banana’s optimization; the model was already optimized in the previous step. You can confirm it’s working from the fast load time (around 2.2s init time rather than around 30s).